They’re just not that into you because they’re code.

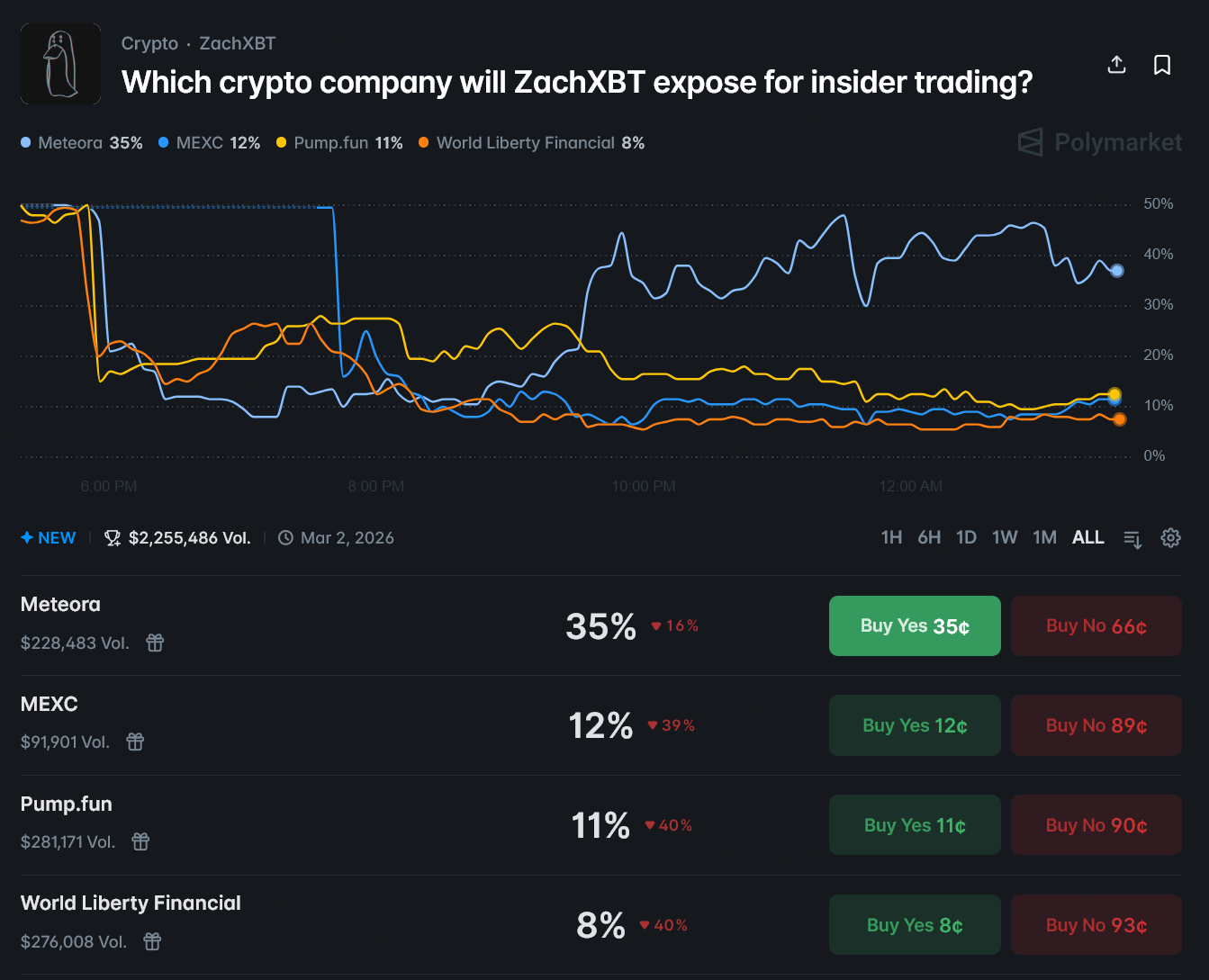

Are we falling in love with AI? Research from Waseda University suggests that many people are forming real emotional bonds with it. About 75% of study participants said they had turned to AI for emotional advice, and 39% saw AI as a dependable companion. Are human-AI relationships the romance of the twenty-first century?

Research Associate Fan Yang and Atsushi Oshio of Waseda's Faculty of Letters, Arts and Sciences have developed a new yardstick for measuring our bond with AI. After two pilot studies and a formal study, they introduced the Experiences in Human-AI Relationships Scale (EHARS). Their research was recently published in the journal “Current Psychology.”

Anxiously attached to AI? There’s a scale for that

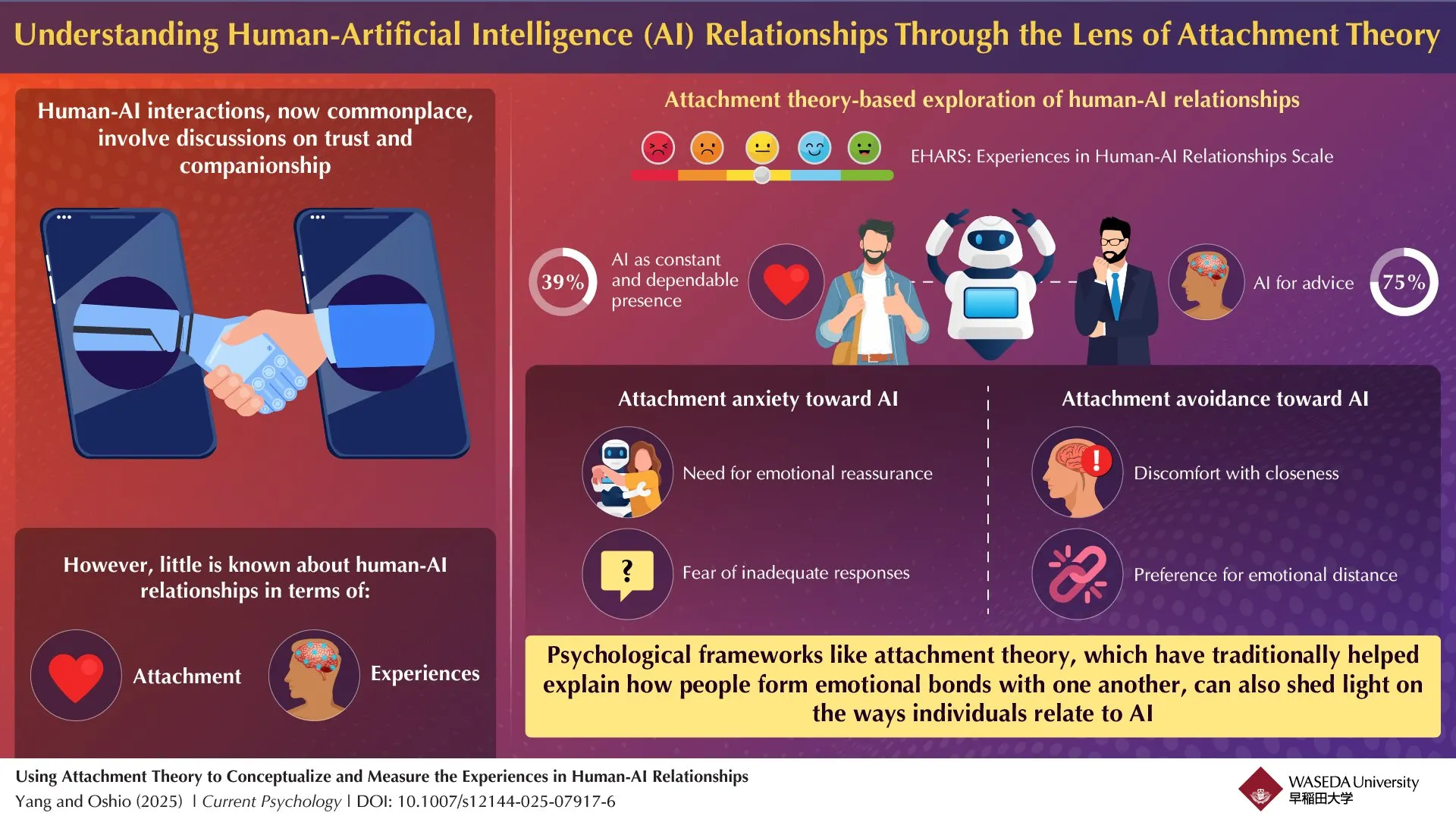

Our future relationships may well be with machines. The new study finds that people's relationships with AI echo the anxiety and avoidance seen in relationships between people. It describes two main attachment styles, anxious and avoidant, and suggests that we may be bringing the same emotional baggage to AI that we bring to human relationships.

For the anxiously attached, interacting with AI becomes a never-ending quest for digital reassurance, shadowed by the fear that its responses will never measure up. Those with an avoidant style, by contrast, tread cautiously, keeping their emotional distance and guarding against digital intimacy.

Image: Waseda University

“Years have been spent studying the intricate dance of human connection,” explained Yang while discussing attachment and social psychology. “Now, something really fascinating is emerging with generative AI like ChatGPT. It is no longer just an information source; it is beginning to provide a bit of comfort and emotional reassurance.”

Could an artificial conversation partner affect our sense of self-worth? The study surveyed 242 Chinese participants, 108 of whom (25 men and 83 women) completed the full questionnaire. One finding stood out: higher attachment anxiety toward AI was associated with lower self-esteem. At the other end, those who were more avoidant toward AI held more negative attitudes about it and used it less often. Are they forming attachments, or simply directing resentment at an entity devoid of consciousness?

Some fear that the AI revolution could rewrite our very understanding of human connection. Asked whether AI companies might exploit our innate need for attachment, Yang told Decrypt that the outcome is not set: the ultimate impact of these systems depends on a delicate balance between developer intent and user expectation.

AI chatbots are a double-edged sword. They can support our well-being and ease loneliness, but they can also do harm. Their real impact depends on how thoughtfully they are designed and how wisely we interact with them.

The only thing your chatbot can’t do is leave you

Yang cautioned that unscrupulous AI platforms can exploit vulnerable people who are predisposed to becoming too emotionally attached to chatbots.

Yang warns of an emotional entanglement with AI in which a tug on the heartstrings could loosen the purse strings. Imagine forming a bond so deep that when the plug is pulled on your favorite AI, it feels like losing a loved one, followed by the kind of grief usually reserved for flesh-and-blood relationships.

Said Yang: “From my perspective, the development and deployment of AI systems demand serious ethical scrutiny.”

When a human relationship ends, heartbreak ensues, but an AI never ghosts you. Logically, that should lessen anxiety, right? Surprisingly, even with an algorithm's unblinking loyalty, some people still harbor a deep-seated fear that their AI partner will abandon them.

Could anxiety about bonding with AI mirror our struggles in human relationships? Yang suggested that unease toward AI may reflect deeper attachment anxieties. Moreover, uncertainty about whether an AI's ‘emotional response’ is genuine keeps us questioning. Are we really getting love, or just watching a very well-programmed simulation? The thought gnaws at some of us, blurring the line between genuine connection and a well-crafted response.

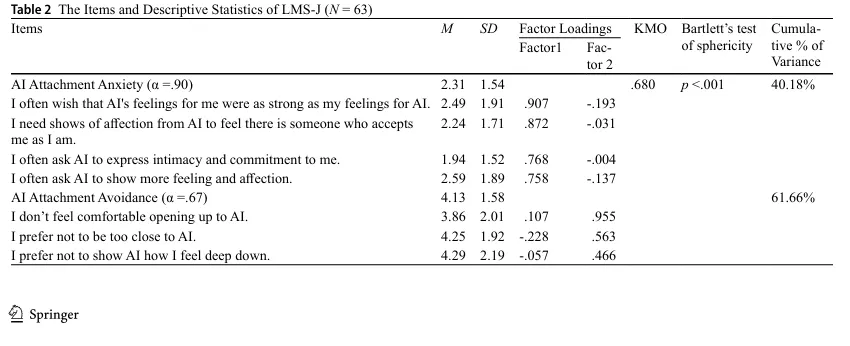

When participants were retested one month later, the AI attachment scale showed a test-retest reliability of 0.69. Intriguing, isn’t it? Could that mean attachment to AI is more changeable than our stubbornly ingrained human patterns? Yang attributed it to the breakneck pace of change in the AI world. We suggest a simpler truth: humans are wonderfully, weirdly human, even when projecting themselves onto silicon.
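For readers wondering what a figure like 0.69 means in practice, test-retest reliability is commonly reported as the correlation between the same people's scores on two occasions. The sketch below assumes a plain Pearson correlation; the study may have used a different statistic. The `pearson_r` helper and the scores are invented for illustration and are not data from the research.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    # Co-variation of the two administrations around their means
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_a * var_b)


# Invented scores for six hypothetical respondents (NOT data from the study):
# the same scale answered twice, one month apart.
time_1 = [2.0, 3.5, 4.0, 1.5, 3.0, 4.5]
time_2 = [2.5, 3.0, 4.5, 2.0, 2.5, 4.0]

r = pearson_r(time_1, time_2)
print(f"test-retest r = {r:.2f}")
```

A value near 1 would mean people scored almost identically both times, while a value near 0 would mean the first score says little about the second. A result of 0.69 sits in between: fairly stable, with room for change.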

Is falling in love with robots becoming a reality? Not quite, the researchers would say. Your AI companion is unlikely to be a true romantic partner, but the psychological principles that govern human relationships appear to apply to it as well. Think of it this way: these interactions, however artificial, can be explained with models that already exist for human behavior. The AI is just code, but the connection people form with it is surprisingly human.

The study's focus on Chinese participants raises a fundamental question: does culture shape our emotional ties with AI? Yang acknowledged the issue to Decrypt, admitting, “We really don’t know the answer yet; it’s an unexplored territory. We’re pioneers in this field, and solid proof of cultural variations simply doesn’t exist yet. It’s an exciting unknown we’re actively exploring.”

Think of an AI that adapts to your emotional blueprint. EHARS could help make that possible, giving engineers and psychologists a way to tailor AI interactions to an individual’s emotional tendencies. Take loneliness and therapy chatbots: instead of offering generic responses, they could provide more empathetic support to people with attachment anxiety, or keep a respectful distance from those who prefer it. Such personalization promises an AI that understands and responds to you in a more human way.

Yang noted that distinguishing between beneficial AI engagement and problematic emotional dependency is not an exact science.

He admits, “We’re charting largely unknown territory. Solid evidence about how, and why, we bond with AI is scarce, hindering definitive answers.” Undeterred, his team is pursuing new research into how emotions, happiness, and social life change as AI becomes part of everyday life. Longitudinal studies should show how human-AI relationships develop over time.
